BakedSDF: Meshing Neural SDFs for Real-Time View Synthesis

SIGGRAPH 2023

Lior Yariv*

Weizmann Institute of Science

Google Research

Peter Hedman*

Google Research

Christian Reiser

Tübingen AI Center

Google Research

Dor Verbin

Google Research

Pratul P. Srinivasan

Google Research

Richard Szeliski

Google Research

Jonathan T. Barron

Google Research

Ben Mildenhall

Google Research

Abstract

We present a method for reconstructing high-quality meshes of large unbounded real-world scenes suitable for photorealistic novel view synthesis. We first optimize a hybrid neural volume-surface scene representation designed to have well-behaved level sets that correspond to surfaces in the scene. We then bake this representation into a high-quality triangle mesh, which we equip with a simple and fast view-dependent appearance model based on spherical Gaussians. Finally, we optimize this baked representation to best reproduce the captured viewpoints, resulting in a model that can leverage accelerated polygon rasterization pipelines for real-time view synthesis on commodity hardware. Our approach outperforms previous scene representations for real-time rendering in terms of accuracy, speed, and power consumption, and produces high-quality meshes that enable applications such as appearance editing and physical simulation.

BakedSDF

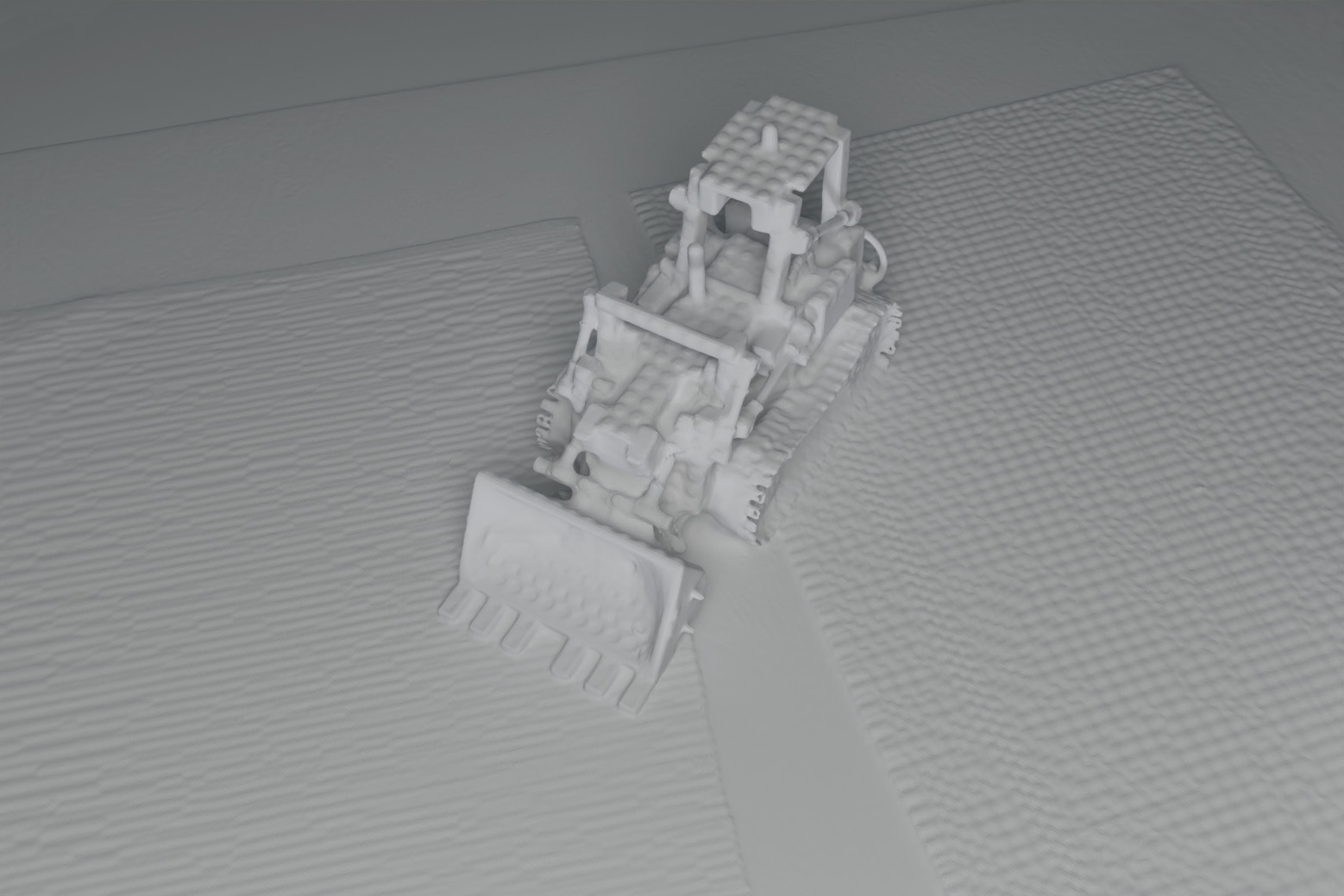

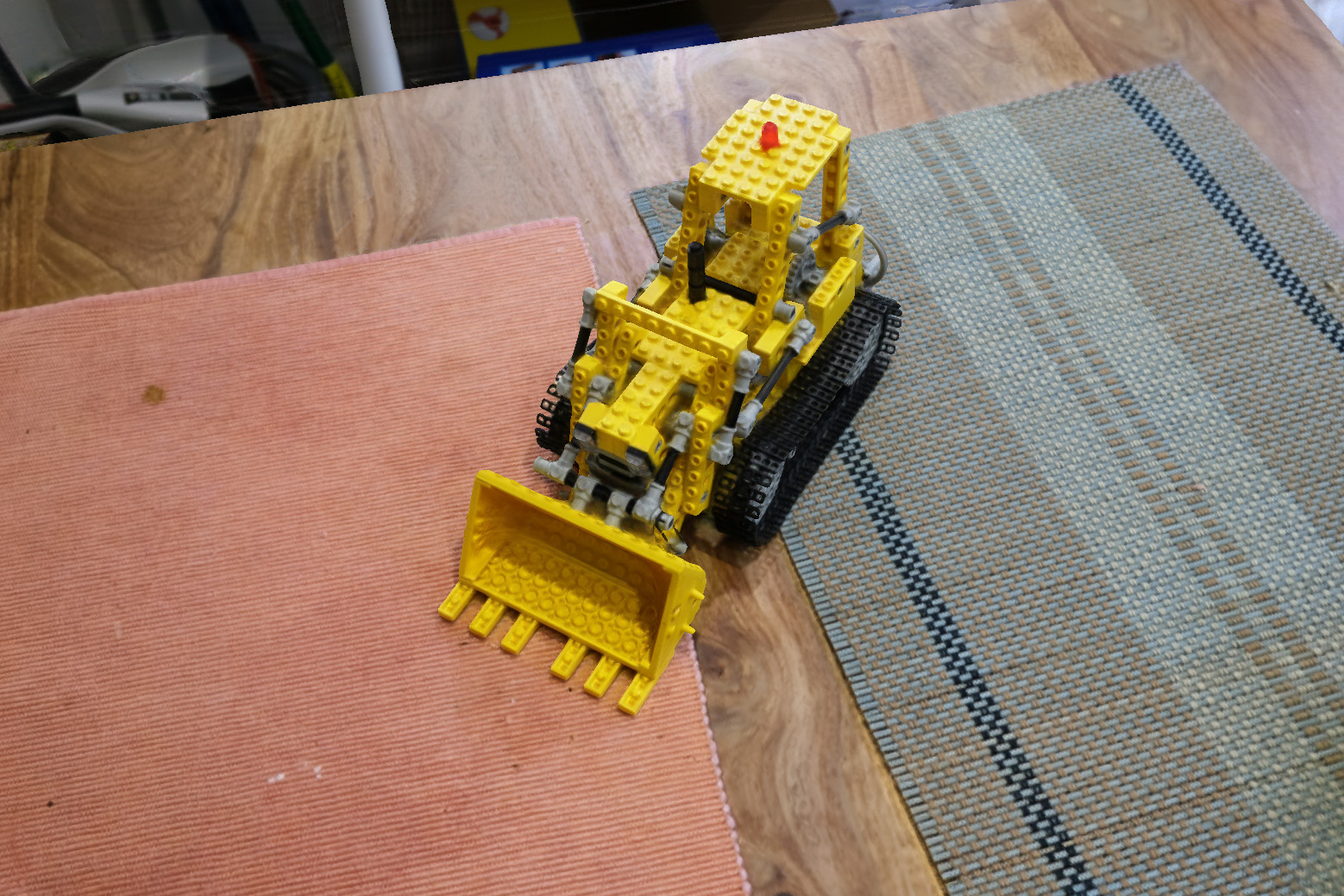

Our method bakes a hybrid VolSDF/mip-NeRF 360 scene representation into a triangle mesh, then optimizes a lightweight view-dependent appearance model to reproduce the training images. The final color of the rendered mesh is a sum of spherical Gaussian lobes (queried with the outgoing view direction) and a diffuse color. These parameters are stored per-vertex on the underlying mesh.
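The appearance model described above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: it assumes the common spherical Gaussian form `c_i * exp(lambda_i * (mu_i · d - 1))` for each lobe, and the function names (`normalize`, `sg_color`), the tuple layout of a lobe, and the example values are all hypothetical. In a real renderer the per-vertex parameters would presumably be interpolated across each triangle before this evaluation runs per pixel.

```python
import math


def normalize(v):
    """Return v scaled to unit length."""
    n = math.sqrt(sum(x * x for x in v))
    return tuple(x / n for x in v)


def sg_color(diffuse, lobes, view_dir):
    """Evaluate diffuse color plus a sum of spherical Gaussian lobes.

    diffuse:  RGB triple (view-independent color).
    lobes:    list of (mean_direction, sharpness, rgb) triples; this layout
              is an assumption made for the sketch.
    view_dir: outgoing view direction (need not be normalized).
    """
    d = normalize(view_dir)
    color = list(diffuse)
    for mean, sharpness, rgb in lobes:
        mu = normalize(mean)
        cos_angle = sum(a * b for a, b in zip(mu, d))
        # The lobe weight is 1 when d aligns with mu and decays as they diverge.
        weight = math.exp(sharpness * (cos_angle - 1.0))
        for k in range(3):
            color[k] += weight * rgb[k]
    return tuple(color)


# Hypothetical parameters for one vertex: a single lobe pointing along +z.
diffuse = (0.2, 0.3, 0.4)
lobes = [((0.0, 0.0, 1.0), 10.0, (0.5, 0.5, 0.5))]

aligned = sg_color(diffuse, lobes, (0.0, 0.0, 1.0))    # lobe fully visible
opposite = sg_color(diffuse, lobes, (0.0, 0.0, -1.0))  # lobe contributes almost nothing
```

Looking along the lobe direction adds the full lobe color to the diffuse term, while looking from the opposite side leaves essentially only the diffuse color. That behavior is what lets a small number of lobes approximate view-dependent effects such as highlights.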

Mesh Extraction and Rendering

Citation

Acknowledgements

The website template was borrowed from Michaël Gharbi.